The way people discover information online is undergoing a fundamental shift. A mid-2024 survey showed that roughly 31% of Gen Z internet users rank ChatGPT or other AI tools among their top three methods of searching for information. As an SEO professional, I’ve seen this shift firsthand while helping a 60-year-old non-profit modernize its digital presence as it transitions to a headless CMS architecture. Ensuring visibility in LLMs has become a critical consideration in that digital transformation. The fascinating part? Being indexed and referenced by LLMs requires a substantially different approach than traditional SEO. Let’s dive into what it takes to make your content truly LLM-friendly, exploring the technical challenges and opportunities this new frontier presents.

Understanding the LLM Content Discovery Ecosystem

When I first started exploring how LLMs discover and reference content, I assumed it would be similar to traditional search engines. The reality turned out to be far more complex and fascinating. Let me share what I’ve learned about the unique way LLMs source and process information.

First, let’s look at how LLMs find your content. Unlike Google, which continuously crawls the web and refreshes its index, most LLMs are trained on fixed datasets that are updated only periodically, although assistants such as ChatGPT and Perplexity can also search the web at query time. This means your content needs to be both discoverable and valuable enough to make it into these training sets and retrieval results. Claude, ChatGPT, and other major LLMs typically draw their training data from sources such as Common Crawl, academic databases, and other curated collections.

The implications? Your content’s authority and format matter more than ever. Traditional SEO signals like backlinks still play a role, but LLMs place greater emphasis on semantic relationships and contextual relevance. They’re particularly good at understanding natural language patterns and identifying high-quality, informative content that answers specific questions comprehensively.

A key challenge in modern web development is the impact of JavaScript-heavy architectures on LLM visibility. While Google has become quite proficient at rendering JavaScript, many AI crawlers appear to read only the initial HTML response and don’t execute JavaScript at all. This means that content injected by JavaScript or loaded dynamically might be invisible to LLMs, even if it’s perfectly accessible to human visitors and traditional search engines. As with everything in AI, this can change quickly, and it may be a challenge of the past before we know it.
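
If you want to approximate what a non-rendering crawler sees, one quick check is to reduce the raw HTML your server returns to plain text and look for your key content in it. Here’s a minimal sketch using only Python’s standard-library `html.parser`; the function names are hypothetical, and it assumes the crawler reads the initial HTML response as-is, without running any scripts.

```python
from html.parser import HTMLParser

# Elements whose contents are code or inert markup, not readable page text.
SKIP_TAGS = {"script", "style", "template"}


class RawTextExtractor(HTMLParser):
    """Collects the text present in raw HTML, before any JavaScript runs."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside a skipped element
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_without_js(html: str) -> str:
    """Return the text a crawler that doesn't execute JavaScript could read."""
    parser = RawTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def content_survives_without_js(html: str, key_phrase: str) -> bool:
    """True if key_phrase appears in the raw HTML text (case-insensitive)."""
    return key_phrase.lower() in visible_without_js(html).lower()
```

In practice you’d feed it the HTML from `curl` or `urllib.request.urlopen(url).read().decode()` rather than a literal string. A client-rendered shell such as `<div id="root"></div>` plus a script fails the check, while a server-rendered page containing the same heading passes.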

LLMs seem to favor content that demonstrates clear expertise and comprehensive coverage of a topic. This isn’t news to the SEO community; in fact, Google has been shifting in this direction for quite some time. In my research, I found that detailed, well-structured content with clear hierarchical relationships performed better in LLM responses. This isn’t just about keyword density or metadata anymore; it’s about creating content that fits naturally into the vast body of knowledge LLMs draw on to generate responses.

The authority signals that matter to LLMs are also quite different from traditional SEO. While Google might look at domain authority and backlink profiles, LLMs appear to place more weight on the internal coherence and informativeness of the content itself. They’re remarkably good at identifying content that provides genuine value versus content that’s simply been optimized for search engines.

What does this mean for content creators? Your focus should be on creating clear, comprehensive, and well-structured content that demonstrates real expertise. Use semantic HTML markup and structured data to help LLMs understand your content. Schema markup is particularly powerful here: it helps LLMs understand not just the content itself, but its context and relationships. And most importantly, write for humans first; LLMs are getting better at identifying and preferring content that provides genuine value to readers.

This shift in content discovery mechanisms represents both a challenge and an opportunity. While it may require rethinking some traditional SEO practices, it also means that truly valuable content has a better chance of being surfaced, regardless of the domain’s historical authority or backlink profile. The key is to focus on creating high-quality, informative content while ensuring your technical implementation doesn’t create barriers to LLM discovery.

Zero-Click Responses: The New Normal

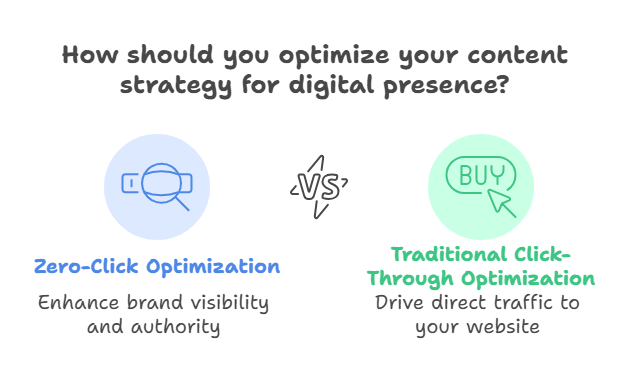

Many SEO professionals panic when they hear the term “zero-click.” After all, isn’t the whole point to drive traffic to your website? But in the world of LLM optimization, zero-click responses aren’t just inevitable; they’re actually an opportunity to expand your brand’s reach and authority.

Here’s why: when an LLM quotes your content directly in its response, you’re essentially getting prime placement in what we might call “position zero” of the AI response. Think about it like this: when Google features your content in a featured snippet, you might lose some clicks, but you gain massive visibility and authority. The same principle applies to LLM responses, but with even greater potential for brand recognition.

The key difference lies in how LLMs attribute information. Unlike traditional search engines, where users might skim past your brand name in a featured snippet, LLM-powered assistants, especially those that search the web, often name their sources explicitly: “According to [Your Brand]…” or “As explained by [Your Company]…” This attribution becomes part of the conversation, embedding your brand directly into the user’s information-seeking experience.

What’s particularly interesting is how this changes the game for content strategy. Instead of optimizing solely for clicks, we need to optimize for quotability and authority. Your content needs to be structured in a way that makes it easy for LLMs to extract and reference key information while maintaining your brand voice and expertise.

Here’s what I’ve found works particularly well:

- Clear, definitive statements that can stand alone as quotes

- Numerical data and statistics presented in a straightforward format

- Step-by-step processes or methodologies that can be easily referenced

- Unique insights or perspectives that differentiate your brand

- Concise definitions or explanations of complex topics

The format of your content becomes crucial here. Using proper heading structure, bulleted lists, and especially schema markup helps LLMs understand which parts of your content are most quote-worthy. In my experience, FAQ schema has been particularly effective in getting content featured in LLM responses.

But here’s the most interesting part: zero-click responses in LLMs often lead to deeper engagement down the line. When users see your brand consistently cited as an authority across multiple AI interactions, they’re more likely to seek out your content directly for more comprehensive information. It’s a long-term authority play that actually builds trust more effectively than traditional click-through traffic.

This doesn’t mean you should abandon traditional traffic-driving strategies. Instead, think of LLM optimization as an additional layer in your digital presence. Your content should be designed to work on multiple levels: providing quick, quotable insights for AI responses while maintaining depth and value for direct visitors.

What makes this approach particularly powerful is its compound effect. Every time an LLM quotes your content, it’s not just a one-time exposure; it becomes part of the ongoing conversation between AI and users. Your brand becomes woven into the fabric of how people understand topics in your space, creating a new kind of digital authority that extends beyond traditional SEO metrics.

Technical Optimization for LLM Visibility

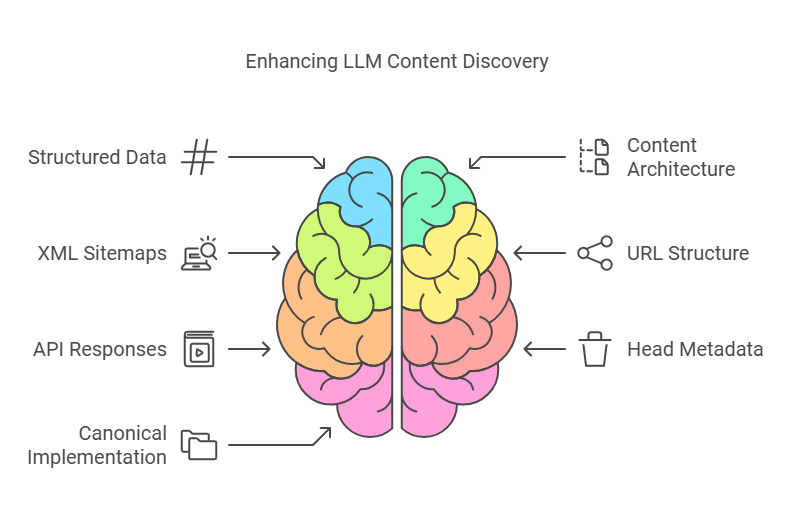

The technical aspects of optimizing for LLMs require a fundamentally different approach than traditional SEO. While we’ve discussed JavaScript rendering challenges earlier, there are several other crucial technical considerations that affect how LLMs discover and process your content.

Let’s start with structured data implementation. While schema markup has been important for traditional SEO, it takes on new significance with LLMs. These AI models use structured data to understand not just what your content says, but what it means in a broader context.

Key considerations with schema include:

- Implementing schema markup that clarifies content relationships

- Using nested schema structures to show content hierarchy

- Ensuring schema validates and accurately represents your content

- Utilizing multiple schema types where appropriate (for example, an Article with a Person author and an Organization publisher)

- Including temporal and contextual signals in your schema
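
To make the nested-schema idea concrete, here’s a sketch that builds JSON-LD for an Article whose author is a Person and whose publisher is an Organization, with publish and modified dates serving as temporal signals. The property names come from schema.org; the function name and its parameters are hypothetical, and you’d still want to validate the output with a tool such as the Schema Markup Validator.

```python
import json


def article_jsonld(
    headline: str,
    url: str,
    date_published: str,
    date_modified: str,
    author_name: str,
    author_url: str,
    org_name: str,
    org_url: str,
) -> str:
    """Build nested schema.org JSON-LD: Article -> Person author, Organization publisher."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": url,
        "datePublished": date_published,  # ISO 8601 dates act as temporal signals
        "dateModified": date_modified,
        "author": {"@type": "Person", "name": author_name, "url": author_url},
        "publisher": {"@type": "Organization", "name": org_name, "url": org_url},
    }
    # Escape "</" so text such as "</script>" can't close the tag early;
    # "\/" is a valid JSON escape that parsers decode back to "/".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

The returned string goes into the page’s `<head>` or body as-is; nesting the Person and Organization objects keeps the relationships explicit instead of leaving them to be inferred from page layout.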

Content architecture becomes particularly important for LLM comprehension. Unlike traditional search engines that might piece together content from various page elements, LLMs tend to process content more linearly.

What this means for content architecture:

- Organizing content in a logical, hierarchical structure

- Using clear section demarcations with semantic HTML

- Implementing proper HTML5 sectioning elements (article, section, aside)

- Ensuring content flows naturally and maintains context

- Creating clear topical clusters through internal linking
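
To check what structure a linear reader actually gets from your markup, it can help to print a rough outline of sectioning elements and headings. The sketch below is a simplification built on Python’s standard-library `html.parser`, not the HTML5 outline algorithm (which browsers never implemented); the tag sets and names are assumptions for illustration.

```python
from html.parser import HTMLParser
from typing import Optional

SECTIONING = {"main", "article", "section", "aside", "nav"}
HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class OutlineParser(HTMLParser):
    """Records sectioning elements and headings in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0  # how many sectioning elements we're nested inside
        self.lines: list[str] = []
        self._heading: Optional[str] = None  # tag name while inside a heading
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SECTIONING:
            self.lines.append("  " * self.depth + f"<{tag}>")
            self.depth += 1
        elif tag in HEADINGS:
            self._heading, self._text = tag, []

    def handle_endtag(self, tag):
        if tag in SECTIONING and self.depth > 0:
            self.depth -= 1
        elif tag == self._heading:
            self.lines.append("  " * self.depth + f"{tag}: {' '.join(self._text)}")
            self._heading = None

    def handle_data(self, data):
        if self._heading and data.strip():
            self._text.append(data.strip())


def outline(html: str) -> list[str]:
    """Return an indented outline of sectioning elements and headings."""
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return parser.lines
```

For example, `<main><article><h1>Guide</h1><section><h2>Setup</h2></section></article></main>` produces an outline with the `h1` directly inside `<article>` and the `h2` nested one level deeper inside `<section>`. If the outline looks flat or jumbled, a linear reader will likely see it that way too.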

XML sitemaps take on new importance for LLM crawling. Consider:

- Including clear content type signals

- Adding accurate last-modified dates and update frequency information

- Implementing separate sitemaps for different content types

- Using news sitemaps for time-sensitive content

- Including image and video signals where relevant
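
Here’s a minimal sketch of per-content-type sitemaps following the sitemaps.org protocol, using Python’s built-in `xml.etree.ElementTree`. The `Page` dataclass and its `content_type` field are assumptions for illustration; news, image, and video sitemaps use additional extension namespaces that this sketch leaves out.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    loc: str           # absolute URL
    lastmod: str       # W3C Datetime, e.g. "2025-01-15"
    changefreq: str    # e.g. "weekly"; only a hint, which some crawlers ignore
    content_type: str  # hypothetical field used to split sitemaps by type


def build_sitemap(pages: list[Page]) -> str:
    """Serialize pages as a sitemaps.org <urlset> document."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page.loc
        ET.SubElement(url, "lastmod").text = page.lastmod
        ET.SubElement(url, "changefreq").text = page.changefreq
    body = ET.tostring(urlset, encoding="unicode")  # escapes & in URLs for us
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def sitemaps_by_type(pages: list[Page]) -> dict[str, str]:
    """Group pages by content type and build one sitemap per group."""
    groups: dict[str, list[Page]] = {}
    for page in pages:
        groups.setdefault(page.content_type, []).append(page)
    return {ctype: build_sitemap(group) for ctype, group in groups.items()}
```

Each returned document would be saved as its own file (for example, a hypothetical `sitemap-news.xml`) and listed in a sitemap index that you reference from robots.txt.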

URL structure and information architecture need special attention:

- Create logical content hierarchies reflected in URLs

- Implement clear categorization systems

- Use descriptive URLs that convey content meaning

- Maintain consistent URL patterns across content types

- Consider implementing topic clusters in URL structure
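
Descriptive, consistent URLs are easier to keep when every slug is generated the same way. Here’s a minimal sketch using Python’s standard library; the function names, the ASCII-only slug policy, and the trailing-slash convention are assumptions rather than requirements.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Turn a title into a lowercase ASCII URL segment."""
    # Decompose accented characters (é -> e + accent), then drop non-ASCII marks.
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    # Collapse every run of non-alphanumeric characters into a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def hierarchical_path(*segments: str) -> str:
    """Build a descriptive path such as /category/topic/page/ from titles."""
    slugs = [slug for slug in (slugify(s) for s in segments) if slug]
    return "/" + "".join(f"{slug}/" for slug in slugs)
```

For example, `hierarchical_path("Programs", "Youth Programs & Camps")` yields `/programs/youth-programs-camps/`, so the URL mirrors the content hierarchy. Whichever conventions you choose, applying them through one shared function is what keeps URL patterns consistent across content types.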

API responses and content delivery need to be optimized for LLM consumption:

- Implement proper content versioning

- Ensure consistent metadata delivery

- Structure API responses logically

- Include all necessary contextual information

- Maintain clear content relationships in API structure
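
For a headless CMS, these points can translate into a stable response envelope. The sketch below is one hypothetical shape, not any particular CMS’s API: every field name, including `schema_version` and `related_ids`, is an assumption for illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ContentItem:
    """Hypothetical content record returned by a headless CMS API."""
    id: str
    content_type: str        # e.g. "program", "news", "event"
    version: int             # incremented on every published revision
    title: str
    body_html: str           # server-ready HTML, not a client-side template
    updated_at: str          # ISO 8601 timestamp
    canonical_url: str
    metadata: dict[str, str] = field(default_factory=dict)  # description, locale...
    related_ids: list[str] = field(default_factory=list)    # explicit relationships


def to_api_response(item: ContentItem) -> str:
    """Wrap the item in a stable envelope so every consumer gets the same fields."""
    envelope = {"schema_version": "1.0", "data": asdict(item)}
    return json.dumps(envelope, indent=2)
```

Delivering rendered `body_html` means whatever front end consumes the API can serve complete HTML, which ties back to the JavaScript rendering concern, and the explicit `related_ids` list keeps content relationships visible instead of implied by layout.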

Head metadata remains crucial but needs adaptation for LLMs:

- Implement clear title hierarchies

- Use descriptive meta descriptions

- Include relevant OpenGraph tags

- Add appropriate Twitter card metadata

- Maintain consistent meta tag structures
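
Consistency is easiest to maintain when one function generates every page’s head tags. This sketch uses the standard Open Graph (`og:*`) and Twitter card (`twitter:*`) property names; the function signature, the “Page | Site” title pattern, and the `summary_large_image` card choice are assumptions.

```python
from html import escape


def head_meta(title: str, description: str, url: str, image_url: str, site_name: str) -> str:
    """Render one consistent set of <head> tags for a page."""
    names = [  # the description and Twitter (X) card tags use the "name" attribute
        ("description", description),
        ("twitter:card", "summary_large_image"),
        ("twitter:title", title),
        ("twitter:description", description),
        ("twitter:image", image_url),
    ]
    properties = [  # Open Graph tags use the "property" attribute
        ("og:type", "article"),
        ("og:title", title),
        ("og:description", description),
        ("og:url", url),
        ("og:image", image_url),
        ("og:site_name", site_name),
    ]
    lines = [f"<title>{escape(title)} | {escape(site_name)}</title>"]
    # escape() also escapes quotes, so values can't break out of content="..."
    lines += [f'<meta name="{n}" content="{escape(v)}">' for n, v in names]
    lines += [f'<meta property="{p}" content="{escape(v)}">' for p, v in properties]
    return "\n".join(lines)
```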

Canonical implementation becomes even more important:

- Establish clear content hierarchies

- Handle pagination properly

- Manage duplicate content effectively

- Implement cross-domain canonicals where needed

- Address content syndication properly
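
Much of canonicalization is URL normalization: collapsing variants that serve the same content into one preferred form, then declaring it with `<link rel="canonical">`. This is a minimal sketch built on `urllib.parse`; the list of ignored parameters, the forced HTTPS scheme, and the trailing-slash convention are all assumptions you’d adjust to your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed to never change page content, so they are dropped from canonicals.
IGNORED_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid", "sessionid",
}


def canonical_url(url: str) -> str:
    """Collapse URL variants that serve the same content into one form."""
    parts = urlsplit(url)
    host = parts.netloc.lower()  # hostnames are case-insensitive; paths are not
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and "." not in last_segment:
        path += "/"  # assumed site convention: page URLs end with a slash
    # Keep content-changing params such as ?page=2 (pagination), in a stable order.
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k.lower() not in IGNORED_PARAMS)
    # Force HTTPS and drop the #fragment, which never reaches the server.
    return urlunsplit(("https", host, path, urlencode(kept), ""))


def canonical_link(url: str) -> str:
    """Render the <link rel="canonical"> tag for a page."""
    return f'<link rel="canonical" href="{canonical_url(url)}">'
```

For syndicated copies on another domain, the partner’s page would carry a canonical link pointing at your original URL; that is all a cross-domain canonical amounts to.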

Remember: technical optimization for LLMs isn’t about tricking the system; it’s about making your content as accessible and understandable as possible. Focus on clean, semantic markup, proper content structure, and ensuring your content relationships are clearly defined through appropriate technical implementations.

Content Creation Strategies for LLM Optimization

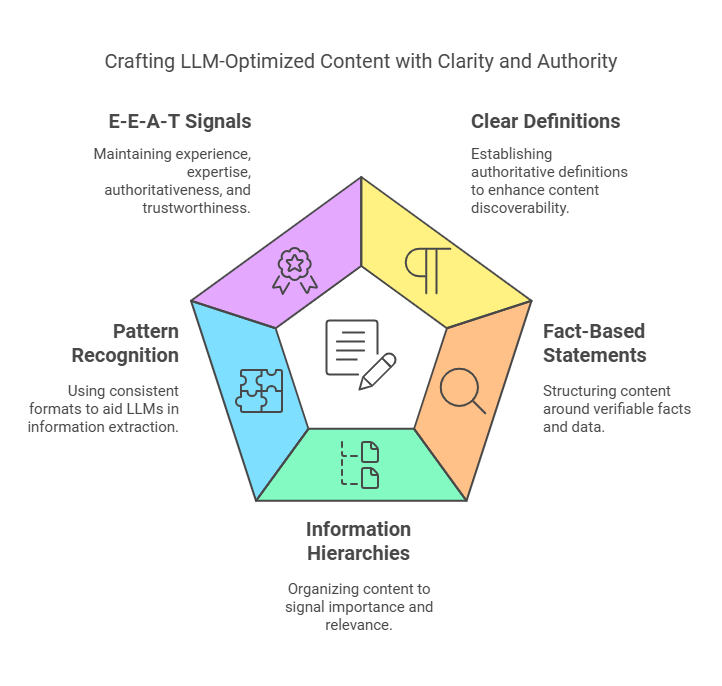

Creating content that resonates with both LLMs and human readers requires a delicate balance. The foundation of LLM-friendly content lies in clear information hierarchy and comprehensive coverage. LLMs excel at understanding contextual relationships and identifying authoritative content.

Here’s what I’ve found works particularly well:

Establish Clear Definitions:

LLMs frequently pull definitions and explanations from source content. Start key sections with clear, authoritative definitions of important concepts. For example, instead of gradually explaining a topic, lead with “X is defined as…” or “X refers to…” statements. This makes your content more likely to be quoted when users ask definitional questions.

Use Fact-Based Statements:

LLMs prioritize factual, verifiable information. Structure your content around clear statements that can be easily verified:

- Include specific statistics with dates and sources

- Reference industry standards and definitions

- Cite academic research and studies

- Provide concrete examples and use cases

- Include quantifiable metrics and measurements

Create Clear Information Hierarchies:

Content structure signals importance to LLMs. Organize your content with clear hierarchical relationships:

- Use proper heading structure (H1 through H6)

- Group related concepts together

- Start with broad concepts before diving into specifics

- Use transition sentences to connect ideas

- Maintain logical progression of topics
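
A simple automated check can catch the most common hierarchy problems before publishing. This sketch, using Python’s standard library, flags pages that don’t have exactly one H1 and any heading that jumps down more than one level (an H2 followed directly by an H4, say). The one-H1 rule is a widely used convention rather than a formal requirement, so treat it as an assumption you can relax.

```python
import re
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so "H2" arrives as "h2".
        if re.fullmatch(r"h[1-6]", tag):
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list[str]:
    """Flag a missing or duplicated H1 and any jump of more than one level down."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected exactly one h1, found {levels.count(1)}")
    for prev, curr in zip(levels, levels[1:]):
        if curr > prev + 1:  # moving back up (h3 -> h2) is fine
            problems.append(f"h{prev} is followed by h{curr} (skips a level)")
    return problems
```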

Implement Pattern Recognition-Friendly Formats:

LLMs are excellent at recognizing and extracting information from consistent patterns. Structure recurring information in consistent formats:

- Use consistent formatting for similar types of information

- Create standardized templates for recurring content types

- Maintain consistent section ordering across similar pages

- Use parallel structure in lists and bullet points

- Format data presentations consistently

Focus on Comprehensive Coverage:

LLMs favor content that provides complete information about a topic. This doesn’t mean writing longer content, but rather ensuring thorough coverage:

- Address common questions and concerns

- Include relevant context and background

- Explain relationships between concepts

- Cover both basic and advanced aspects

- Provide practical applications and examples

Maintain E-E-A-T Signals:

Experience, Expertise, Authoritativeness, and Trustworthiness remain crucial for LLM optimization:

- Clearly establish author expertise

- Include relevant credentials and experience

- Reference authoritative sources

- Provide evidence for claims

- Update content regularly with new information

Remember Entity Relationships:

LLMs are particularly good at understanding relationships between entities. Make these relationships explicit in your content:

- Define clear connections between related concepts

- Use consistent terminology for entities

- Explain cause-and-effect relationships

- Highlight industry-standard classifications

- Map relationships between different topics
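
Consistent terminology is easy to lose across many authors and pages. One low-tech safeguard is a terminology check against a house style guide. The preferred terms and variants below are hypothetical placeholders; you’d replace them with your own organization’s entity names.

```python
import re

# Hypothetical style guide: preferred entity name -> variants that should be replaced.
PREFERRED_TERMS = {
    "Example Foundation": ["Example Fdn", "ExampleFoundation"],
    "headless CMS": ["head-less CMS", "headless content system"],
}


def terminology_issues(text: str) -> list[tuple[str, str]]:
    """Return (variant found, preferred term) pairs, in style-guide order."""
    issues = []
    for preferred, variants in PREFERRED_TERMS.items():
        for variant in variants:
            # Whole-word, case-insensitive match on the escaped variant text.
            if re.search(rf"\b{re.escape(variant)}\b", text, flags=re.IGNORECASE):
                issues.append((variant, preferred))
    return issues
```

Running this in an editorial workflow keeps each entity under one name, which makes the relationships between entities easier for both readers and LLMs to follow.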

Consider Question-Answer Format:

LLMs often pull content to answer specific questions. Structure some content in Q&A format:

- Include common questions as headings

- Provide direct, clear answers

- Use FAQ schema markup

- Address follow-up questions

- Group related questions together
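
The FAQ schema mentioned above follows a fixed schema.org pattern: an FAQPage whose `mainEntity` is a list of Question items, each with an `acceptedAnswer`. Here’s a minimal sketch that builds it from question-and-answer pairs; the function name is hypothetical.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)
```

Embed the output in a `<script type="application/ld+json">` tag on the page that shows the same questions and answers. Since 2023, Google has shown FAQ rich results only for a narrow set of well-known government and health sites, so the case for this markup here rests on machine readability rather than on search snippets.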

The key is creating content that serves both human readers and AI systems without sacrificing quality for either. Focus on clarity, comprehensiveness, and logical structure while maintaining an engaging, readable style for your human audience.

Ethical Considerations and Future Trends

The emergence of LLMs as information gatekeepers presents both opportunities and ethical challenges for content creators and SEO professionals. While traditional search engines have developed sophisticated systems to combat manipulation, LLMs are still catching up, creating a landscape that’s ripe for both innovation and potential abuse.

The Return of Cloaking?

One of the most interesting developments is the potential return of cloaking, as mentioned by Kevin Indig in his predictions for 2025. Will it be beneficial to show different content to crawlers versus users? Unlike Google, which actively penalizes this practice, current LLMs don’t appear to have robust systems to detect and penalize content manipulation. This creates an interesting dilemma:

- LLMs might actually prefer seeing structured, data-rich versions of content

- There’s currently no known technical penalty for serving different versions

- This could lead to better information extraction and references

- However, it raises ethical concerns about content authenticity

- The long-term sustainability of such practices is questionable

Quality Signals in LLMs:

The way LLMs evaluate content quality differs significantly from traditional search engines:

- Traditional link-based authority metrics matter less

- Content coherence and internal consistency matter more

- Factual accuracy can be harder to verify

- Context and relationships between concepts are crucial

- Standard quality metrics might not apply

This shift in quality evaluation creates new challenges:

- How do we verify the accuracy of information?

- What constitutes authority in an LLM context?

- How can we maintain content integrity?

- What role does traditional E-E-A-T play?

- How do we balance optimization with authenticity?

Emerging Verification Methods

As LLMs evolve, new content verification methods are emerging:

- AI-powered fact-checking systems

- Cross-reference verification mechanisms

- Source authenticity verification

- Content consistency checking

- Temporal validation of information

Future Considerations:

Looking ahead, several trends are likely to shape LLM optimization:

- Development of LLM-specific quality metrics

- Evolution of content authentication systems

- Integration of blockchain for content verification

- Real-time content updating mechanisms

- Enhanced source attribution systems

The most crucial consideration is sustainability. While certain optimization techniques might work today, the landscape is rapidly evolving. Focusing on authentic, valuable content remains the safest long-term strategy.

Key Questions for the Future:

- Will LLMs develop more sophisticated anti-manipulation systems?

- How will content attribution evolve?

- What role will real-time updates play?

- How will user feedback influence LLM rankings?

- What new optimization techniques will emerge?

In the nearer term, we’re likely to see:

- More sophisticated content verification systems

- Better integration between traditional search and LLMs

- Enhanced methods for detecting manipulated content

- More emphasis on real-time information updates

- Stronger focus on content authenticity

The Path Forward

As we navigate this evolving landscape, maintaining ethical standards while adapting to new technologies will be crucial. The goal should be to optimize content in ways that enhance its value for both LLMs and users, rather than seeking short-term advantages through manipulation.

Remember: while certain aggressive optimization techniques might work now, investing in high-quality, authentic content remains the most sustainable long-term strategy. The future of LLM optimization lies not in manipulation, but in creating content that genuinely serves user needs while being efficiently processable by AI systems.

I’m an SEO and performance marketing leader who loves breaking down complex strategies into clear, actionable insights. I have driven SEO and performance marketing growth for reputable brands such as SAP, Four Seasons, BioMarin Pharmaceutical, and Rosewood Hotels.